Get Metrics-Ready - with Your Existing Data Stack

How PowerMetrics’ flexible integrations design meets data teams where their data is

Learn how PowerMetrics enables data teams to create and fulfill metrics from diverse data sources, including data warehouses, semantic layers, service APIs and spreadsheets. Its flexible integrations approach gets data teams started with metrics, no matter what their current data stack looks like. PowerMetrics kickstarts a metrics-first strategy and is designed to evolve with your data stack as it matures. All the while, PowerMetrics will continue to ensure consistent, straightforward analytics across the entire organization. Join us for the webinar on June 18!

At Klipfolio, we believe in a metrics-first analytics strategy. We built PowerMetrics, an approachable metric-based product, to help business users understand their data and make better decisions. The concept of “metrics as data artifacts” is powerful, yet simple: All metrics follow the same rules and expose the same capabilities, regardless of how they’re defined or fulfilled. This unique quality makes it easy to learn how to use and extract real-world value from the data in metrics. This universal approach to data consumption is one of the driving forces behind our metric philosophy.

Consuming metric data in visualizations is a uniform experience. Creating and fulfilling metrics, by contrast, happens in a diverse and sometimes complex environment. The reality for most data teams is that their data is everywhere, stored in many different kinds of storage systems and exposed through many different types of APIs. There’s no “one size fits all” data stack, and, as such, the way in which metrics need to be defined differs considerably from organization to organization. On top of that, data stacks evolve over time within the same organization as the business grows in its data needs and maturity.

While the concept of metrics is gaining popularity, fulfillment has often been a challenge for data teams. With PowerMetrics, we took on that challenge - and we think we solved it. Read on to learn how you can use PowerMetrics to build accurate, governed, single-source-of-truth metrics with data from data warehouses, semantic layers, service APIs, and spreadsheets (the last two through data feeds). Our integration set will help your data teams build a metrics strategy that grows with you, regardless of the maturity and design of your data stack. We believe PowerMetrics is the most flexible metric platform for modern data stacks available today. After reading this article, we hope you’ll agree!

For an overview and first-hand learning from product experts, join us for our webinar on June 18. Representatives from Klipfolio, Cube, dbt Labs, and Datateer will describe how our flexible integrations approach gets you metrics-ready quickly, regardless of your current setup.

Okay, so we’ve talked about how PowerMetrics can integrate with virtually any data stack. Now, let’s dive into how each option works so you can decide which one (or ones) are right for you and your organization!

Data warehouse metrics

When to use data warehouse metrics:

Your organization has already consolidated its data into a data warehouse

Your organization has the skill set and tooling to create tables or views that are optimized for metric consumption

It’s important to your organization that the data is stored in a single location in the data stack

It’s now possible to define metrics over a data warehouse (BigQuery, Snowflake, Databricks, Redshift, PostgreSQL, and MariaDB) and fulfill them in PowerMetrics - without the need for a semantic layer. Each direct-to-warehouse metric is defined using a single table or view within a database. This metric type is ideal for data teams that have already put in place all of the data collection and transformation processes (e.g., using dbt Core) required to consolidate and manage their data in a data warehouse, but have not (yet) adopted a semantic layer technology to model that data.

Each metric connected directly to a data warehouse must reference a single table or view within the warehouse, and that table or view must have at least one date column and at least one non-date column. Defining the metric requires some extra query details, such as the metric’s aggregation strategy and how it should resolve changes of values over time. This may require the data team to transform their data such that a suitable table or view exists to power the metric.

When querying a data warehouse metric in PowerMetrics, the query is converted into a set of simple and universal SQL statements against the table or view used by the metric. The results from these SQL queries are consolidated and made meaningful by PowerMetrics according to the query parameters and the metric definition. Data stays in the data warehouse and is not stored in PowerMetrics except for some short-term (configurable) caching to reduce load on the data warehouse.
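To make the idea of "simple and universal SQL statements" concrete, here is a minimal sketch of how a metric definition over a single table or view could be turned into a query. It is an illustration only, not PowerMetrics’ actual implementation: the `WarehouseMetric` class, the `build_query` function, the table `analytics.daily_orders`, and all column names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Aggregations accepted by this sketch (an assumption, not a product list).
ALLOWED_AGGREGATIONS = {"SUM", "AVG", "MIN", "MAX", "COUNT"}


@dataclass(frozen=True)
class WarehouseMetric:
    """Hypothetical metric definition over one table or view."""
    table: str         # the single table or view the metric references
    date_column: str   # the required date column
    value_column: str  # a non-date column holding the measured value
    aggregation: str   # how values are combined, e.g. "SUM"


def build_query(metric: WarehouseMetric, dimension: Optional[str] = None) -> str:
    """Build one plain SQL statement for the metric.

    The date range is left as named parameters (:start, :end) so the
    warehouse driver can bind them. Identifiers are interpolated directly,
    so they must come from a trusted metric definition, never user input.
    """
    agg = metric.aggregation.upper()
    if agg not in ALLOWED_AGGREGATIONS:
        raise ValueError(f"unsupported aggregation: {metric.aggregation}")

    group_columns = [metric.date_column]
    if dimension:
        group_columns.append(dimension)
    group_by = ", ".join(group_columns)

    return (
        f"SELECT {group_by}, {agg}({metric.value_column}) AS value "
        f"FROM {metric.table} "
        f"WHERE {metric.date_column} BETWEEN :start AND :end "
        f"GROUP BY {group_by} "
        f"ORDER BY {metric.date_column}"
    )


revenue = WarehouseMetric("analytics.daily_orders", "order_date", "amount", "sum")
sql = build_query(revenue, dimension="region")
print(sql)
```

The point of the sketch is that the metric definition carries the aggregation and the date column, so the consumer only has to supply a date range and an optional dimension.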

Semantic layers

When to use semantic layer metrics:

You already have a semantic layer configured within your data stack

Your data needs require advanced modeling and query abstraction

Semantic layers, a relatively new concept in the analytics industry, add a layer of refinement and description over the data stored in data warehouses. A semantic layer provides a set of modeled artifacts that make it easy for downstream applications to understand the data and use it appropriately. Data exposed through modeled artifacts in the semantic layer is ready for use by BI tools, data applications, and AI systems, because it’s clean, well-described, and carries meaningful query logic, such as predefined aggregations and functions.

We’re excited (and proud) to announce our new integration with Cube!

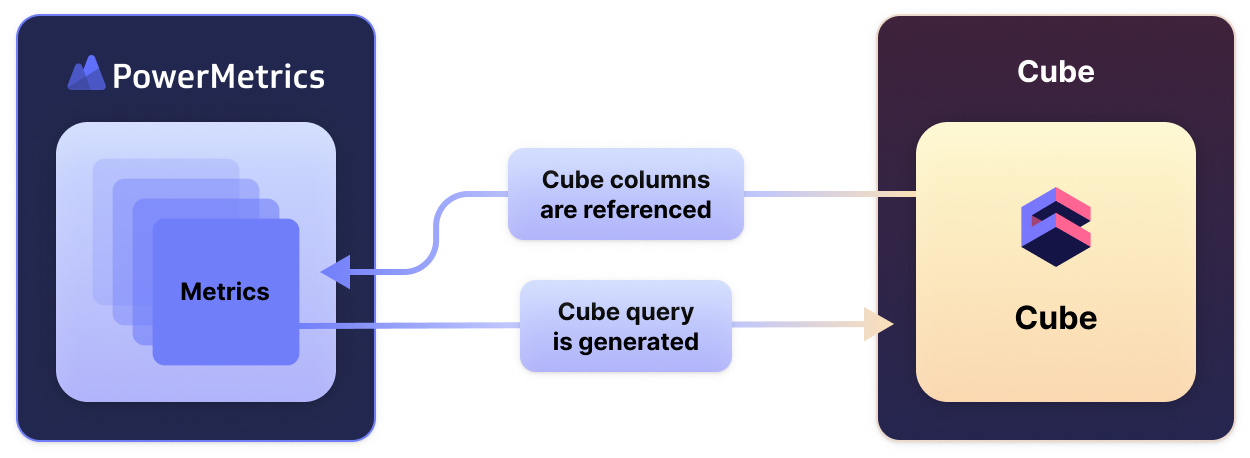

Cubes, managed by Cube, are semantic artifacts that expose fields of various types, from measures to dimensions, all with built-in query logic to protect the meaning of the data within the cube. Querying cubes is easy and doesn’t require advanced query options, such as aggregations or grouping constructs, since they’re built into the cubes.

PowerMetrics enables the creation of metrics by referencing those fields directly within a cube. Because the aggregation logic is already defined within the semantic layer, it doesn’t have to be specified again in the metric. When a Cube metric is used in PowerMetrics, all the queries are converted to cube queries and executed against Cube directly. This means that data for these metrics stays in the database that the cube is modeled from. Other than some short-term caching, PowerMetrics does not store any data for these semantic layer metrics.
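As a rough illustration of what a cube query looks like, the sketch below assembles a query payload in the general shape of Cube’s JSON query format (`measures`, `dimensions`, `timeDimensions`). The helper `cube_query`, the cube name `orders`, and its members are hypothetical, and the exact queries PowerMetrics sends are not described in this article.

```python
import json
from typing import Dict, List, Sequence


def cube_query(
    measure: str,
    time_dimension: str,
    date_range: Sequence[str],
    granularity: str = "day",
    dimensions: Sequence[str] = (),
) -> Dict[str, List]:
    """Assemble a query payload that references cube members by name.

    No aggregation is specified here: the measure's aggregation is
    already defined inside the cube's data model.
    """
    return {
        "measures": [measure],
        "dimensions": list(dimensions),
        "timeDimensions": [
            {
                "dimension": time_dimension,
                "dateRange": list(date_range),
                "granularity": granularity,
            }
        ],
    }


# Hypothetical members of a hypothetical "orders" cube.
query = cube_query(
    "orders.total_revenue",
    "orders.created_at",
    ("2024-01-01", "2024-01-31"),
    granularity="week",
    dimensions=["orders.region"],
)
print(json.dumps(query, indent=2))
```

Compared with a direct-to-warehouse metric, the query names only members of the cube; the aggregation and grouping logic stays in the semantic layer.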

Semantic layers with a metric layer

When to use metric layer metrics:

You already have a set of metrics defined in a metric layer

The metrics you need require very specialized or highly optimized queries

Recognizing the need for semantic layer options, we released our dbt Semantic Layer integration in Q4 2023.

The dbt Semantic Layer (dbt SL) from dbt Labs is an example of a semantic layer that also provides a metric layer. This design enables data teams to not only model, clean, and describe their data using a semantic model, but also to define the metrics directly over that model. dbt SL also provides a metric query API to list and query metrics as individual artifacts.

Because dbt Semantic Layer metrics are powered by a semantic layer, the data is only queried by PowerMetrics and not stored. When a dbt Semantic Layer metric is imported into PowerMetrics, only a thin wrapper is stored in PowerMetrics. This wrapper references the dbt Semantic Layer metric directly and adds an extra set of descriptive information, such as formatting and business consumption details.

Data feed metrics

When to use data feed metrics:

The data is extracted from a service API

The data can only be queried for a limited range of time, for example, the last 30 days

The data is in a spreadsheet, CSV, or other file format

Your organization does not yet have a data storage system, such as a data warehouse

A lot of data within organizations isn’t stored in data warehouses or modeled in a semantic layer. This may be because the organization hasn’t yet defined their internal data stack, or because the data is extracted directly from service APIs outside their data stack, or stored in documents or file systems. With PowerMetrics, you can define metrics from these types of data sources and use the resulting metrics in combination with any other type of metric.

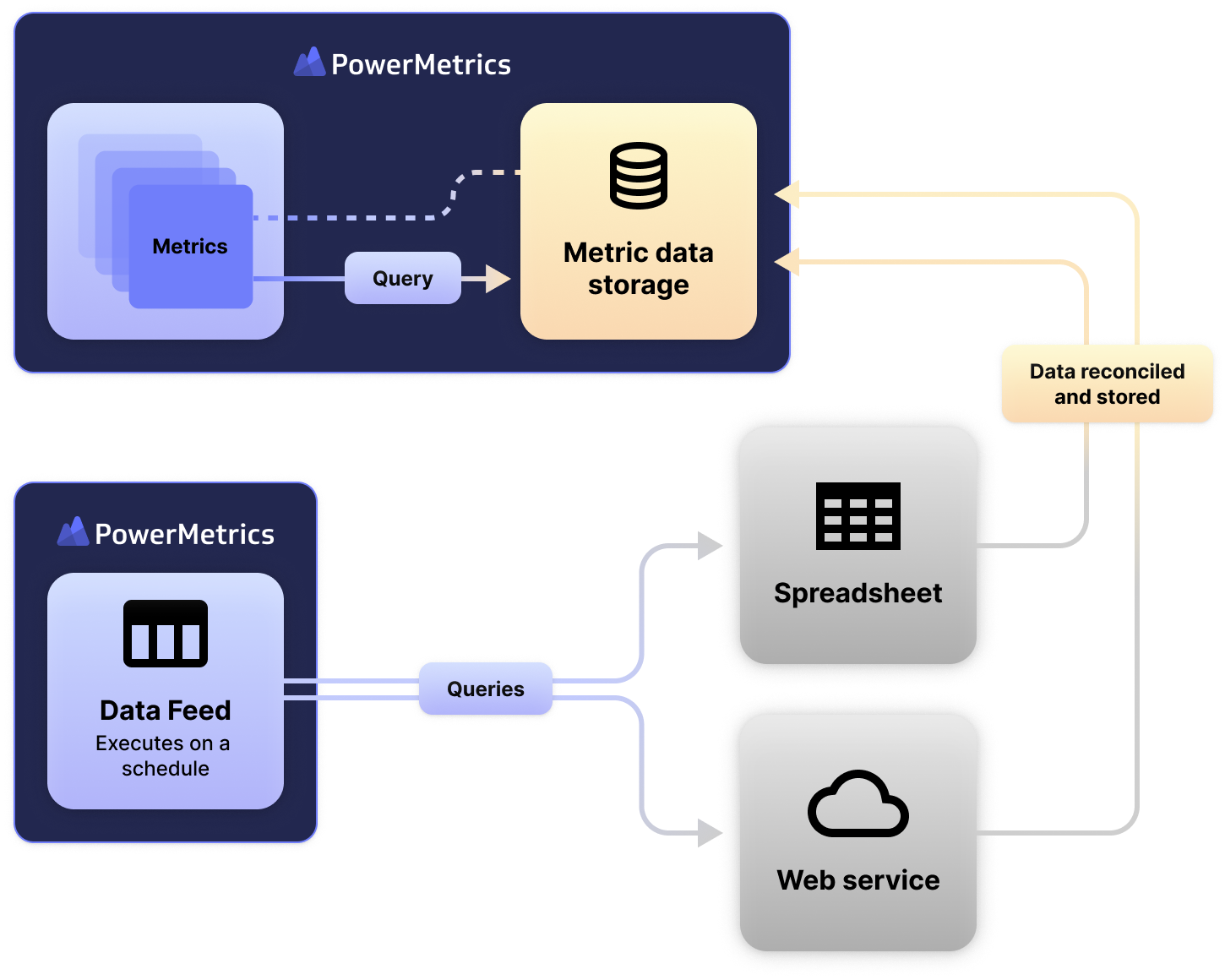

In PowerMetrics, data feeds are an internal modeled artifact that packages together all of the configuration required to query data from its source. Data feeds also include some modeling to transform data into a form that can be consumed and stored in a metric. Data feeds are defined and managed directly in PowerMetrics. Each data feed is typically scheduled to execute (refresh) on a regular basis so new data can be ingested into the metrics created from it.

A data feed metric is defined from a feed in much the same way as a data warehouse metric is defined from a table - by choosing the columns from the feed to be used as the values, dimensions, and date information in the metric. Much like a data warehouse metric, these metrics also need to define some aggregation and query rules over the data stored in the metric. A key difference from data warehouse metrics, however, is that a metric based on a feed stores its own data in proprietary data storage, adding to that data each time the feed is executed to get new data. This means that, for these types of metrics, the data is stored within PowerMetrics. This design is ideal for extracting and building a history of metric values from already processed and aggregated data, such as that exposed by service APIs like Google Analytics.

Data feed-based metrics ingest new data each time the feed is executed. A set of sophisticated data reconciliation strategies is built into PowerMetrics to prevent overlapping data from being double counted within the metric, while also allowing data to be corrected by more recent ingestions.
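The reconciliation strategies themselves are not detailed here, so the following is only a sketch of one simple possibility: key each stored value by date and dimension, and let a later ingestion replace, rather than add to, an earlier value for the same key. The `ingest` function and the sample rows are hypothetical.

```python
from datetime import date
from typing import Dict, Iterable, Tuple

Key = Tuple[date, str]  # (date, dimension value)


def ingest(store: Dict[Key, float], rows: Iterable[dict]) -> Dict[Key, float]:
    """Merge one feed execution into the stored metric history.

    A row whose (date, dimension) key already exists overwrites the
    stored value, so overlapping periods are not double counted and a
    more recent ingestion can correct earlier data.
    """
    for row in rows:
        store[(row["date"], row["dimension"])] = row["value"]
    return store


store: Dict[Key, float] = {}

# First feed execution: June 1 and June 2.
ingest(store, [
    {"date": date(2024, 6, 1), "dimension": "organic", "value": 120},
    {"date": date(2024, 6, 2), "dimension": "organic", "value": 90},
])

# Second execution overlaps June 2 (with a corrected value) and adds June 3.
ingest(store, [
    {"date": date(2024, 6, 2), "dimension": "organic", "value": 95},
    {"date": date(2024, 6, 3), "dimension": "organic", "value": 110},
])

total = sum(store.values())
print(len(store), total)
```

With a naive append, June 2 would be counted twice; with the keyed overwrite, the history holds three days and the corrected June 2 value.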

A common use case for data feeds is bringing in and modeling file system data, such as that from spreadsheets (e.g., Excel, Google Sheets, Smartsheet, Airtable), so it can be consumed by metrics. Updating the spreadsheet and executing the feed updates the metrics, taking advantage of the same type of reconciliation strategies used when the feed execution is scheduled.

Calculated metrics

When to use calculated metrics:

The metric can be expressed as a formula over more basic metrics, for example, Profit = Revenue - Expenses

The metric can only be expressed as a formula over the post-aggregated results from its operands

The operands are metrics defined from different sources, or even different types

Calculated metrics are unique in that they don’t access data directly. Instead, they’re defined through a formula using other metrics as the operands within that formula.

The formula for a calculated metric is executed on the post-aggregated results of its operand metrics. These operands can be metrics of any type, a mix of types or even other calculated metrics. Calculated metrics are an easy way to expose more metrics to the metric consumer by building on the existing artifacts in the system.

Some types of calculations can only be expressed correctly as a calculated metric. A common example is a ratio, where the correct ratio value is the ratio of the aggregated results from the operand metrics, not the aggregation of the ratios of the unaggregated data.
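A small worked example shows why the order of operations matters for ratios. The campaign names and numbers below are made up for illustration; the "calculated metric" is simply one aggregated operand divided by another.

```python
# Unaggregated rows: two campaigns with very different volumes.
rows = [
    {"campaign": "A", "clicks": 10, "impressions": 100},
    {"campaign": "B", "clicks": 90, "impressions": 9000},
]

# Operand metrics, each aggregated first (SUM).
total_clicks = sum(r["clicks"] for r in rows)
total_impressions = sum(r["impressions"] for r in rows)

# Calculated metric: the formula runs on the post-aggregated operands.
ratio_of_aggregates = total_clicks / total_impressions

# The incorrect alternative: compute a ratio per row, then average the ratios.
row_ratios = [r["clicks"] / r["impressions"] for r in rows]
average_of_ratios = sum(row_ratios) / len(row_ratios)

print(f"ratio of aggregates: {ratio_of_aggregates:.4f}")
print(f"average of ratios:   {average_of_ratios:.4f}")
```

The ratio of aggregates is 100 / 9100, roughly 1.1%, which is the true overall click-through rate. Averaging the per-row ratios gives 5.5%, because the small campaign A is weighted the same as the much larger campaign B.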

How a hybrid integrations approach evolves with the growing needs of the data team

If you’re still not sure what type of metric is right for you, don’t sweat it. Just define your metrics based on your current data stack. You can always redefine them later if your data needs change. Whether you start creating and fulfilling metrics from a data warehouse, a semantic layer, service APIs, or spreadsheets, PowerMetrics, and its centralized catalog of metrics, will continue to provide you with the same reliable, rich consumption experience. You’ll never be locked into a single data strategy or data architecture. PowerMetrics will always meet you where your data is and give you trusted metrics for easy self-sufficient exploration.
